Streamlining Functional Verification for Multi-Die and Chiplet Designs

An Opportunity and a Challenge

The manufacturing aspects of multi-die/multi-chiplet designs are often highlighted, but what about verification? Inter-die connections, whether through a standard interface such as UCIe or a custom inter-die interface, are not guaranteed to meet all system requirements for functional correctness and performance. These interfaces must be verified comprehensively, with coverage goals met as the system evolves, just as we demand for each individual component in a multi-die system.

However, this objective presents significant scaling challenges, because an integrated multi-die top level must be created, debugged, and verified. The resulting simulation will be very large. That is not a problem for an emulation system such as Palladium or an FPGA-based prototyper such as Protium™, but those premium engines are likely to be assigned to mid- to late-stage system verification, validation, and software bring-up rather than to the earlier stages of the design cycle.

Most of the verification workload runs on simulators such as Xcelium™, which carry the regression testing for individual IP, subsystems, and full dies. These regressions load compute farms with thousands of runs, repeated continuously through the development cycle. Compute farms are built around servers with a mix of performance and capacity profiles, dictated by engineering needs as well as budget constraints. Few, if any, servers in a typical farm would be large enough to handle a full multi-die system simulation.

A second problem is the effort required to build a top-level testbench incorporating the full system. This type of testing aims to prove interoperability between dies (inter-die communication and register/memory updates), validating that functionality proven per die against VIP sequence generators continues to operate correctly when the same traffic is instead driven from interconnected dies. Design teams working in this area report that creating and debugging even a limited multi-die top level can take several weeks, over and above the effort already spent building each die’s individual testbench.

A third consideration is that a serial approach to interoperability testing is high risk. In every other aspect of design and verification, we work hard to shift left, finding and fixing issues before they become a crisis. This philosophy is just as important in multi-die and chiplet verification. Interoperability tests must run early in the design, even before the interposer connectivity is designed (which typically happens quite late in the design cycle). Nor does this testing need to wait for all die models to be available: meaningful tests should be runnable between whichever die models exist at the time.

Distributed Simulation to the Rescue

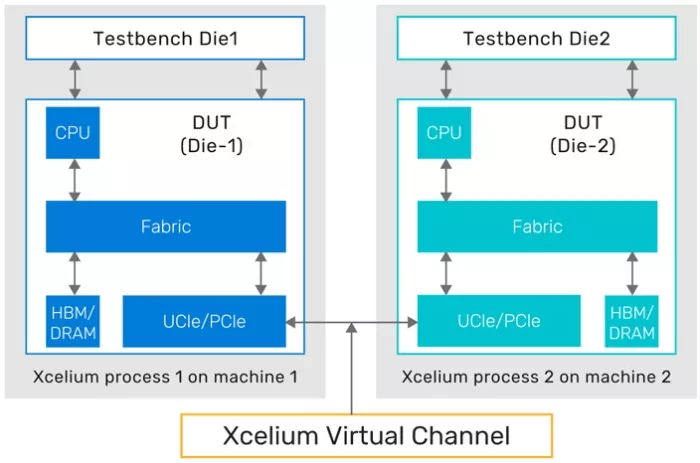

Functionality per die/chiplet has already been proven on available compute resources against the respective testbenches. Problems can still appear, however, when you assemble these designs into a system-in-package (SiP), for which you must also create a new testbench. There is a better approach: the Xcelium Distributed Simulation Verification App runs the die simulations in parallel as independent compute processes, connecting them through Virtual Channels that abstract the RTL bus interfaces. The individual component testbenches thereby become a connected yet distributed system.

Some adaptation is needed to bring this to fruition, but with significantly less effort than constructing a new top-level testbench for the system. Customer experience with the App indicates that such adaptations take, at most, a few days.

Design verification engineers are pursuing a wide range of interoperability tests based on this capability, including:

- System scenarios, such as control, register access, and concurrency

- Die-to-die tests, such as CRC and retry, parity, and error scenarios

- Protocol scenarios, such as host access across chiplets and managing data workloads with concurrent traffic

- Physical-layer checks, such as scrambling/descrambling, different lane speeds, and lane repair

These are the types of tests you need to sign off multi-die integration.

Let’s step back to appreciate what distributed simulation with these characteristics makes possible. Multiple Xcelium chiplet simulations run concurrently in separate processes, driven by the testbenches you have already built and debugged for each individual die. Communication is managed through Virtual Channels, which synchronize the simulations only when necessary. Each simulation therefore runs at close to its standalone throughput, pausing only briefly to link with its peers when a transaction update crosses a channel.
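
To make the message-passing idea concrete, here is a minimal conceptual sketch in Python. It is not the Xcelium API: two threads stand in for the separate die simulation processes, and a pair of queues stands in for a Virtual Channel that carries whole transactions rather than pin-level signals. The names `host_die`, `device_die`, and `run_linked` are hypothetical. Each side runs freely and blocks only when it needs a transaction from its peer.

```python
"""Conceptual sketch only: threads stand in for separate die simulation
processes, and queues stand in for a transaction-level Virtual Channel.
All names are hypothetical; this is not the Xcelium API."""
import queue
import threading


def host_die(tx, rx, writes, acks):
    """Die A: issues register writes to die B and waits for each acknowledgment."""
    for addr, data in writes:
        # Die A's own local stimulus would run here, with no synchronization.
        tx.put(("WRITE", addr, data))  # a whole transaction crosses the channel
        acks.append(rx.get())          # the only synchronization point
    tx.put(("STOP", 0, 0))             # tell die B the test is finished


def device_die(rx, tx, regs):
    """Die B: applies incoming writes to its register map and acknowledges them."""
    while True:
        kind, addr, data = rx.get()
        if kind == "STOP":
            break
        regs[addr] = data
        tx.put(("ACK", addr))


def run_linked(writes):
    """Run both 'dies' concurrently, linked by one queue per direction."""
    a_to_b, b_to_a = queue.Queue(), queue.Queue()
    regs, acks = {}, []
    die_a = threading.Thread(target=host_die, args=(a_to_b, b_to_a, writes, acks))
    die_b = threading.Thread(target=device_die, args=(a_to_b, b_to_a, regs))
    die_a.start()
    die_b.start()
    die_a.join()
    die_b.join()
    return regs, acks


if __name__ == "__main__":
    regs, acks = run_linked([(0x10, 0xAB), (0x14, 0xCD)])
    print(regs)  # {16: 171, 20: 205}
    print(acks)  # [('ACK', 16), ('ACK', 20)]
```

A real distributed simulator must also keep simulated time aligned across processes; this sketch shows only the structure of transaction-level communication between independently running simulations.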

Notably, this level of testing can start as soon as models and testbenches are available for two or more dies. Top-level verification shifts left immediately: in customer trials, it began up to three months before the interposer design became available.

Minimal effort is required to enable this flow, which leverages the work already done for die-level verification. To build the system, all that is needed is a few conditional compile switches, to exclude the external traffic generators used for standalone testing and to set memory-map offsets, plus some API calls to define the Xcelium Virtual Channels. The Xcelium Distributed Simulation Verification App takes care of the rest.
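
The kind of switch described above can be sketched as follows. This is a hypothetical Python illustration, not Xcelium code: `DIST_SIM` mimics a compile-time define (in a SystemVerilog testbench this would typically be an `ifdef`), and the memory map in `DIE_BASE` is invented. The point it shows is that the die-level sequence is reused unchanged; only its source and its address base differ between modes.

```python
"""Hypothetical sketch of a die testbench switch between standalone and
distributed modes. DIST_SIM mimics a compile-time define; the memory map
below is invented for illustration."""
import os

# Stand-in for a conditional compile switch, read once at start-up.
DISTRIBUTED = os.environ.get("DIST_SIM", "0") == "1"

# Illustrative system memory map: base address of each die's register space.
DIE_BASE = {"die0": 0x0000_0000, "die1": 0x4000_0000}


def local_sequence(count):
    """The register-access sequence already used in the die-level testbench,
    expressed as (die-local address, data) pairs."""
    return [(0x100 + 4 * i, i) for i in range(count)]


def build_stimulus(target_die, count, distributed=DISTRIBUTED):
    """Standalone: the local VIP-style generator drives die-local addresses.
    Distributed: the generator is excluded and the same accesses come from
    the peer die, so they are relocated into the system memory map."""
    seq = local_sequence(count)
    if not distributed:
        return seq
    base = DIE_BASE[target_die]
    return [(base + addr, data) for addr, data in seq]


if __name__ == "__main__":
    print([hex(a) for a, _ in build_stimulus("die1", 2, distributed=False)])
    # ['0x100', '0x104']
    print([hex(a) for a, _ in build_stimulus("die1", 2, distributed=True)])
    # ['0x40000100', '0x40000104']
```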

Performance

An obvious concern for some readers is the performance impact of Virtual Channel communication between simulation processes. Does the added latency significantly drag down simulation performance? Live testing within Cadence and with early adopters shows otherwise, with speedups of up to 3X over an equivalent integrated top-level simulation. In other words, although interprocess communication surely adds some overhead, the gain from running smaller simulations in parallel outweighs it, especially for the range of interoperability testing now being considered.
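
Why parallel execution can win despite channel overhead is easiest to see with a back-of-the-envelope model. The Python sketch below is a first-order illustration under stated assumptions, not a description of how Xcelium behaves: it assumes the integrated simulation is one single-threaded process whose cost is roughly the sum of the per-die costs, and that each synchronization has a fixed cost. All numbers are hypothetical, and the model is not meant to reproduce the measured 3X figure.

```python
"""First-order runtime model, for illustration only. Assumes the integrated
simulation is one single-threaded process whose cost is roughly the sum of
the per-die costs, and ignores memory pressure, changing load balance, and
other real-world effects. All numbers are hypothetical."""


def integrated_runtime(die_costs):
    """All dies simulated in one process: their costs add up."""
    return sum(die_costs)


def distributed_runtime(die_costs, n_syncs, sync_cost):
    """Each die in its own process: the slowest die dominates, plus the cost
    of every synchronization over the channels."""
    return max(die_costs) + n_syncs * sync_cost


def break_even_syncs(die_costs, sync_cost):
    """Number of synchronizations at which distribution stops paying off."""
    return (sum(die_costs) - max(die_costs)) / sync_cost


if __name__ == "__main__":
    dies = [1200, 900, 600]  # hypothetical per-die costs, arbitrary units
    print(integrated_runtime(dies))                             # 2700
    print(distributed_runtime(dies, n_syncs=500, sync_cost=2))  # 2200
    print(break_even_syncs(dies, sync_cost=2))                  # 750.0
```

Under this model, the fewer synchronizations a test needs per unit of simulated work, the closer the distributed run gets to the cost of its slowest die.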

Can Distributed Simulation Help with Large Single Die Verification?

As multi-die systems grow larger, the subsystems and dies from which they are built will also grow, further stressing available compute hardware, testbench development, and debug. Could distributed simulation help design and verification teams manage this growing complexity by allowing a single-die design to be partitioned into two or more large blocks, each with its own testbench, and then distributing the simulation in the same way?

Care must be taken over where such splits are made, especially from a performance perspective. Virtual Channels may be too limited to handle high-volume communication between blocks; shared memory may be a better approach (an option this Xcelium App already supports). Even then, communication that requires cycle-by-cycle synchronization may be problematic. Ideally, links should remain transaction-oriented, so the App can let the block simulations run asynchronously except where they must synchronize on a transaction.
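
The difference between the two coupling styles shows up directly in the number of synchronization points. The Python sketch below is illustrative and uses hypothetical names; it assumes the cycles at which transactions cross the split are known in advance (for example, replayed from a trace), whereas a real scheduler has to discover them as the simulation proceeds.

```python
"""Sketch comparing synchronization counts for two ways of coupling a split
design. Assumes the cycles at which transactions cross the split are known
in advance (e.g. replayed from a trace); a real scheduler must discover them
as it goes. Names are hypothetical."""


def lockstep_sync_points(total_cycles):
    """Cycle-by-cycle coupling: the blocks exchange state on every cycle."""
    return total_cycles


def free_run_windows(txn_cycles, total_cycles):
    """Transaction-oriented coupling: split [0, total_cycles) into windows the
    blocks can simulate independently, each ending where a transaction
    crosses the split."""
    boundaries = sorted({c for c in txn_cycles if 0 < c < total_cycles})
    windows, start = [], 0
    for b in boundaries:
        windows.append((start, b))
        start = b
    windows.append((start, total_cycles))
    return windows


if __name__ == "__main__":
    txns = [250, 700, 700, 900]  # two transactions at cycle 700 share one sync
    windows = free_run_windows(txns, 1000)
    print(windows)                     # [(0, 250), (250, 700), (700, 900), (900, 1000)]
    print(len(windows) - 1)            # 3 sync points
    print(lockstep_sync_points(1000))  # 1000 sync points
```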

Distributed simulation for single die designs is drawing attention from multiple design teams and is in active development to find the right tradeoffs to deliver optimum value.

Takeaways

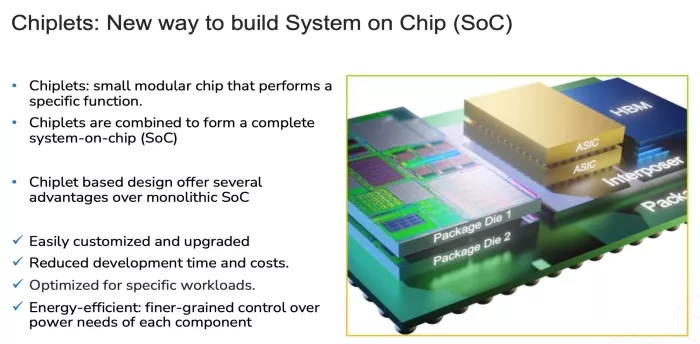

Multi-die implementations are the new reality for the high-performance, high-functionality systems that today’s advanced electronics depend on. Much has been written and developed around methods to manage the complexity of building, characterizing, and optimizing the physical implementation of such systems, but little so far on the challenge of assuring functional quality at signoff. The Xcelium Distributed Simulation Verification App provides the first truly scalable answer to meet this need.

Fully elaborated simulations can be distributed across on-premises or cloud servers, so compute capacity scales with the system rather than being bounded by the largest single server. Just as important, the testbenches used to verify each die can be reused with only minor modifications for full-system verification.

The Xcelium Distributed Simulation Verification App offers a true breakthrough for full multi-die system functional verification: it enables early testing long before interposer characteristics are pinned down, without requiring new testbenches.
