Optimizing Attention on GPUs by Exploiting GPU Architectural NUMA Effects

By Mansi Choudhary 1, Karthik Sangaiah 2, Sonali Singh 2, Muhammad Osama 2, Lisa Wu Wills 3, Ganesh Dasika 2

1 Department of ECE, Duke University, Durham, USA

2 Advanced Micro Devices Inc., Santa Clara, USA

3 Department of Computer Science, Duke University, Durham, USA

Abstract

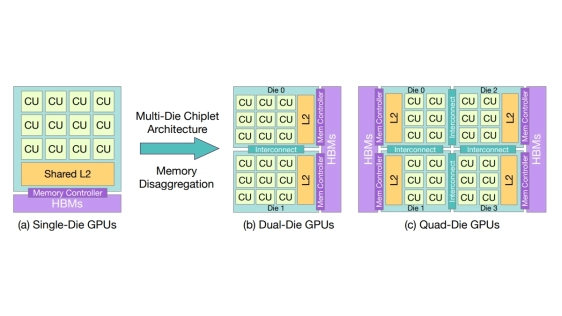

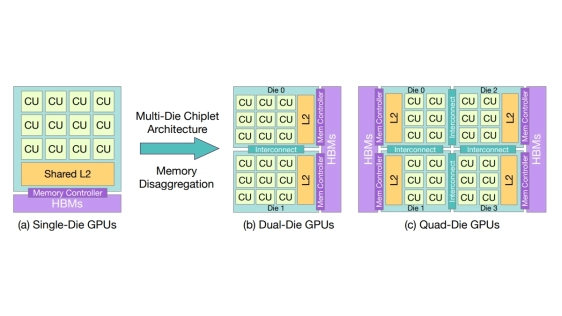

The rise of disaggregated AI GPUs has exposed a critical bottleneck in large-scale attention workloads: non-uniform memory access (NUMA). As multi-chiplet designs become the norm for scaling compute capabilities, memory latency and bandwidth vary sharply across compute regions, undermining traditional GPU kernel scheduling strategies that assume uniform memory access. We identify how these NUMA effects distort locality in multi-head attention (MHA) and present Swizzled Head-first Mapping, a spatially-aware scheduling strategy that aligns attention heads with GPU NUMA domains to exploit intra-chiplet cache reuse. On AMD's MI300X architecture, our method achieves up to 50% higher performance than state-of-the-art attention algorithms that use conventional scheduling techniques, and it sustains consistently high L2 cache hit rates of 80–97%. These results demonstrate that NUMA-aware scheduling is now fundamental to achieving full efficiency on next-generation disaggregated GPUs, offering a path forward for scalable AI training and inference.
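The abstract does not spell out how the mapping is computed, so the following is only a minimal sketch under stated assumptions, not the authors' kernel. It assumes a GPU with 8 NUMA domains (one per XCD, as on MI300X) and that hardware dispatches workgroup IDs round-robin across them (workgroup `i` lands on domain `i % 8`). The function names `naive_head_first`, `swizzled_head_first`, and `xcds_per_head` are hypothetical. The sketch counts how many NUMA domains each attention head's workgroups are spread over: with a conventional head-first order a head is scattered across every domain, while a swizzle that inverts the round-robin dispatch keeps each head on a single domain, so its K/V tiles can be reused from that domain's L2.

```python
from functools import partial

# Assumption: 8 NUMA domains (XCDs), as on MI300X.
NUM_XCDS = 8


def naive_head_first(wg_id: int, blocks_per_head: int) -> tuple[int, int]:
    """Conventional mapping: consecutive workgroup IDs walk one head's query blocks."""
    return wg_id // blocks_per_head, wg_id % blocks_per_head


def swizzled_head_first(wg_id: int, num_wgs: int, blocks_per_head: int,
                        num_xcds: int = NUM_XCDS) -> tuple[int, int]:
    """Hypothetical swizzle: give each XCD a contiguous range of logical tiles.

    Assumes the hardware places workgroup wg_id on XCD wg_id % num_xcds.
    """
    assert num_wgs % num_xcds == 0, "sketch assumes an even split across XCDs"
    xcd = wg_id % num_xcds             # domain this workgroup is dispatched to
    slot = wg_id // num_xcds           # its position among that domain's workgroups
    wgs_per_xcd = num_wgs // num_xcds
    logical = xcd * wgs_per_xcd + slot # contiguous logical tile index per domain
    return logical // blocks_per_head, logical % blocks_per_head


def xcds_per_head(mapping, num_heads: int, blocks_per_head: int,
                  num_xcds: int = NUM_XCDS) -> dict[int, int]:
    """Count how many distinct XCDs serve each head under a given mapping."""
    num_wgs = num_heads * blocks_per_head
    touched = {h: set() for h in range(num_heads)}
    for wg in range(num_wgs):
        head, _ = mapping(wg)
        touched[head].add(wg % num_xcds)  # round-robin dispatch assumption
    return {h: len(s) for h, s in touched.items()}


if __name__ == "__main__":
    heads, blocks = 16, 8  # illustrative sizes: 128 workgroups in total
    naive = xcds_per_head(partial(naive_head_first, blocks_per_head=blocks),
                          heads, blocks)
    swz = xcds_per_head(partial(swizzled_head_first, num_wgs=heads * blocks,
                                blocks_per_head=blocks), heads, blocks)
    print("naive: max XCDs per head =", max(naive.values()))     # 8
    print("swizzled: max XCDs per head =", max(swz.values()))    # 1
```

The swizzle is a bijection over workgroup IDs, so every (head, block) tile is still computed exactly once; only its placement changes. Real kernels must also handle workgroup counts that do not divide evenly across domains, which this sketch rejects with an assertion.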