Taming the Tail: NoI Topology Synthesis for Mixed DL Workloads on Chiplet-Based Accelerators

By Arnav Shukla 1, Harsh Sharma 2, Srikant Bharadwaj 3, Vinayak Abrol 1, Sujay Deb 1

1 Indraprastha Institute of Information Technology Delhi, New Delhi, India

2 Washington State University, Pullman, Washington, USA

3 Microsoft Research, Redmond, Washington, USA

Abstract

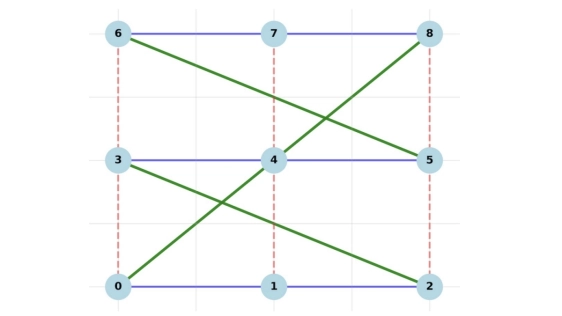

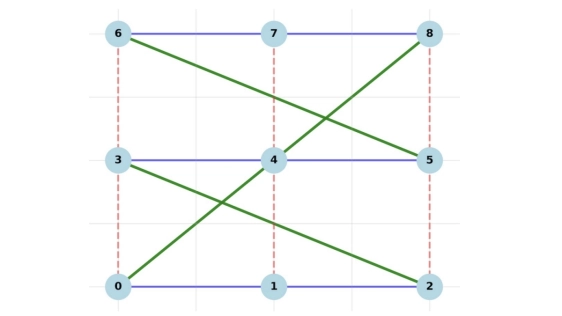

Heterogeneous chiplet-based systems improve scaling by disaggregating CPUs/GPUs and emerging technologies (HBM/DRAM). However, this on-package disaggregation introduces latency in the Network-on-Interposer (NoI). We observe that in modern large-model inference, parameters and activations routinely move to and from HBM/DRAM, injecting large, bursty flows into the interposer. These memory-driven transfers inflate tail latency and violate Service Level Agreements (SLAs) across baseline k-ary n-cube NoI topologies. To address this gap, we introduce an Interference Score (IS) that quantifies worst-case slowdown under contention. We then formulate NoI synthesis as a multi-objective optimization (MOO) problem. We develop PARL (Partition-Aware Reinforcement Learner), a topology generator that balances throughput, latency, and power. PARL-generated topologies reduce contention at the memory cut, meet SLAs, and cut worst-case slowdown to 1.2× while maintaining competitive mean throughput relative to link-rich meshes. Overall, this reframes NoI design for heterogeneous chiplet accelerators with workload-aware objectives.

Keywords: network-on-package, chiplets, Mixture-of-Experts, activation sparsity, sparse multicast, energy-efficiency
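
The abstract does not give the formula for the Interference Score, so the following is only an illustrative sketch of what "worst-case slowdown under contention" could mean. It assumes the slowdown of a flow is the ratio of its latency under contention to its latency in isolation, and that the worst case is the maximum of that ratio over all flows. The function name, flow names, and latency values are hypothetical and are not taken from the paper.

```python
def worst_case_slowdown(isolated_ns, contended_ns):
    """Return the largest contended/isolated latency ratio over all flows.

    Assumption: this ratio-based definition is a stand-in for illustration,
    not the paper's actual Interference Score.
    """
    if isolated_ns.keys() != contended_ns.keys():
        raise ValueError("both measurements must cover the same flows")
    if any(latency <= 0 for latency in isolated_ns.values()):
        raise ValueError("isolated latencies must be positive")
    return max(contended_ns[flow] / isolated_ns[flow] for flow in isolated_ns)


# Hypothetical per-flow latencies in nanoseconds (made-up numbers).
isolated = {"weights_from_hbm": 100.0, "kv_cache": 80.0, "activations": 50.0}
contended = {"weights_from_hbm": 120.0, "kv_cache": 88.0, "activations": 60.0}

score = worst_case_slowdown(isolated, contended)
print(f"worst-case slowdown: {score:.2f}x")
```

Under this reading, a topology that keeps the maximum ratio close to 1 bounds the tail rather than only the mean, which is the kind of objective the abstract argues for.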
