Hemlet: A Heterogeneous Compute-in-Memory Chiplet Architecture for Vision Transformers with Group-Level Parallelism

By Cong Wang, Zexin Fu, Jiayi Huang, and Shanshi Huang

The Hong Kong University of Science and Technology (Guangzhou)

Abstract

Vision Transformers (ViTs) have established new performance benchmarks in vision tasks such as image recognition and object detection. However, these advances come with significant demands for memory and computational resources, which makes hardware deployment challenging. Heterogeneous compute-in-memory (CIM) accelerators have emerged as a promising solution for energy-efficient deployment of ViTs. Despite this potential, monolithic CIM-based designs face scalability issues due to the size limitations of a single chip. Emerging chiplet-based techniques offer a more scalable alternative. However, chiplet designs come with their own costs: communication over the network-on-package (NoP) is more expensive than over the network-on-chip (NoC), which can limit throughput improvements.

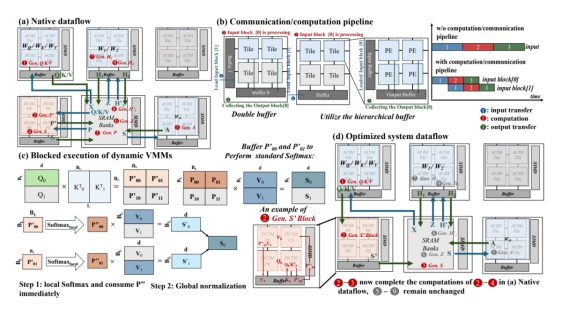

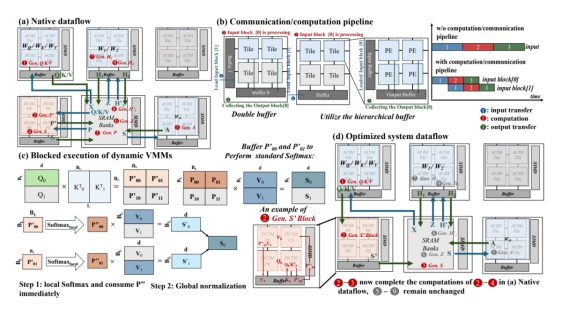

This work introduces Hemlet, a heterogeneous CIM chiplet system designed to accelerate ViTs. Hemlet enables flexible resource scaling by integrating heterogeneous analog CIM (ACIM), digital CIM (DCIM), and Intermediate Data Process (IDP) chiplets. To improve throughput while reducing communication overhead, it employs a group-level parallelism (GLP) mapping strategy and system-level dataflow optimization, achieving speedups ranging from 1.44× to 4.07× across various hardware configurations within the chiplet system. Our evaluation results show that Hemlet can achieve a throughput of 8.68 TOPS with an energy efficiency of 3.86 TOPS/W.

Index Terms—Compute-in-memory, Chiplet, Heterogeneous Computing, Mapping
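
As a rough consistency check on the reported figures, dividing throughput by energy efficiency gives an implied power draw. This assumes both numbers were measured at the same operating point, which the abstract does not state, so the result is only an estimate. The symbol $P$ is introduced here for illustration and does not come from the paper.

$$
P \approx \frac{8.68\ \text{TOPS}}{3.86\ \text{TOPS/W}} \approx 2.25\ \text{W}
$$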
