Blackwell Shipments Imminent, Total CoWoS Capacity Expected to Surge by Over 70% in 2025, Says TrendForce

May 30, 2024 -- TrendForce reports that shortages of NVIDIA’s Hopper H100 began to ease in 1Q24. The H200, built on the same platform, is expected to ramp gradually in Q2, while the Blackwell platform is set to enter the market in Q3 and expand to data center customers in Q4. Even so, this year will still focus primarily on the Hopper platform, which includes the H100 and H200 product lines. Given the current progress of supply chain integration, the Blackwell platform is expected to start ramping up in Q4 and to account for less than 10% of the total high-end GPU market.

The die size of Blackwell platform chips such as the B100 is twice that of the H100, so each chip consumes more CoWoS packaging capacity. As Blackwell becomes mainstream in 2025, TSMC’s total CoWoS capacity is projected to grow by 150% in 2024 and by over 70% in 2025, with NVIDIA’s demand occupying nearly half of this capacity. On the HBM side, the H100 primarily uses 80GB of HBM3, while the B200 arriving in 2025 will feature 288GB of HBM3e, a 3–4 fold increase in capacity per chip. Based on the expansion plans of the three major HBM manufacturers (SK hynix, Samsung, and Micron), HBM production volume is likely to double by 2025.
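Because the two CoWoS growth rates compound rather than add, a quick calculation helps show what they imply. The sketch below normalizes 2023 CoWoS capacity to 1.0 (an illustrative assumption, since TrendForce gives growth rates rather than absolute volumes) and applies the 2024 and 2025 rates in sequence. It also checks the per-chip HBM ratio from the 80GB and 288GB figures. `capacity_index` is a hypothetical helper written for this example.

```python
def capacity_index(base: float, growth_rates: list[float]) -> list[float]:
    """Hypothetical helper: compound yearly growth rates into capacity levels.

    Each rate is a fractional increase, e.g. 1.5 for +150%.
    Returns the capacity level at the end of each year.
    """
    levels = []
    level = base
    for rate in growth_rates:
        level *= 1 + rate  # apply this year's growth on top of last year's level
        levels.append(level)
    return levels


# Assumption: 2023 CoWoS capacity normalized to 1.0 for illustration.
# +150% in 2024, then +70% in 2025 (TrendForce says "over 70%", so this is a floor).
cowos_2024, cowos_2025 = capacity_index(1.0, [1.5, 0.7])
print(f"CoWoS 2024: {cowos_2024:.2f}x, 2025: {cowos_2025:.2f}x of 2023")

# Per-chip HBM capacity: H100 (80GB HBM3) vs. B200 (288GB HBM3e).
hbm_ratio = 288 / 80
print(f"HBM per chip: {hbm_ratio:.1f}x")
```

Under this reading, 2025 CoWoS capacity would be at least roughly 4.25 times the 2023 level (2.5 × 1.7), and the 3.6x HBM ratio sits inside the stated 3–4 fold range.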

Microsoft, Meta, and AWS to be the first to adopt GB200

CSPs such as Microsoft, Meta, and AWS are taking the lead in adopting GB200, and their combined shipment volume is expected to exceed 30,000 racks by 2025. Google, in contrast, is likely to prioritize expanding its own TPU AI infrastructure. The Blackwell platform has been highly anticipated in the market because it departs from the traditional HGX or MGX single AI server approach. Instead, it promotes a whole-rack configuration that integrates NVIDIA’s own CPU, GPU, NVLink, and InfiniBand high-speed networking technologies. GB200 comes in two configurations, NVL36 and NVL72, with 36 and 72 GPUs per rack, respectively. Although NVL72 is the primary configuration NVIDIA is pushing, its design complexity suggests that NVL36 will be introduced first, by the end of 2024, for initial trials to ensure a quick market entry.
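A rough rack-to-GPU conversion helps put the 30,000-rack figure in perspective. TrendForce does not give the split between NVL36 and NVL72 racks, so the sketch below assumes, purely for illustration, two extremes: every rack is NVL36, or every rack is NVL72. `GPUS_PER_RACK` and `gpu_range` are hypothetical names used only here.

```python
# GPUs per rack for the two GB200 configurations described above.
GPUS_PER_RACK = {"NVL36": 36, "NVL72": 72}


def gpu_range(racks: int) -> tuple[int, int]:
    """Hypothetical helper: return (min, max) GPU count for `racks` GB200 racks.

    Assumption: the real NVL36/NVL72 mix is unknown, so the bounds
    correspond to an all-NVL36 and an all-NVL72 shipment.
    """
    low = racks * min(GPUS_PER_RACK.values())
    high = racks * max(GPUS_PER_RACK.values())
    return low, high


low, high = gpu_range(30_000)
print(f"30,000 racks -> {low:,} to {high:,} GPUs")
```

Since shipments are expected to exceed 30,000 racks, these bounds only describe that threshold; they are not a forecast of actual GPU volume.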

AI server demand from tier-2 data centers and the government set to rise to 50% in 2024

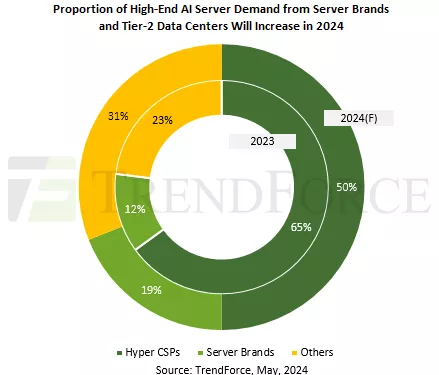

NVIDIA’s FY1Q25 data highlights a noticeable increase in demand from enterprise customers, sovereign AI clouds, and tier-2 data centers. TrendForce notes that in 2023, limited CoWoS and HBM supply led NVIDIA to prioritize its high-end GPUs for hyper CSPs. That year, CSPs accounted for approximately 65% of global high-end AI server demand.

With GPU supply easing in 1H24, the demand share of server brands such as Dell and HPE will expand to 19%. Other buyers, including tier-2 data centers such as CoreWeave and Tesla, as well as sovereign AI clouds and supercomputing projects, will see their combined demand share rise to 31%. This growth is driven by the sensitive, privacy-focused nature of AI training and applications. This segment is expected to play a crucial role in driving future growth in AI server shipments.
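The 50% figure in the section heading follows from adding the two non-CSP shares. The sketch below performs that sum and, under the assumption that CSPs, server brands, and the other buyers together account for all demand, derives an implied 2024 CSP share. That implied share is a calculation for illustration and is not stated in the text.

```python
# 2024 shares (percent) of global high-end AI server demand cited by TrendForce.
shares_2024 = {
    "server brands (e.g., Dell, HPE)": 19,
    "tier-2 DCs, sovereign AI, supercomputing": 31,
}

non_csp_share = sum(shares_2024.values())

# Assumption: the three groups cover all demand, so CSPs hold the remainder.
csp_share_2024 = 100 - non_csp_share
csp_share_2023 = 65  # percent, per TrendForce

print(f"Non-CSP share 2024: {non_csp_share}%")
print(f"Implied CSP share 2024: {csp_share_2024}% (down from {csp_share_2023}%)")
```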

For more information on reports and market data from TrendForce’s Department of Semiconductor Research, please email the Sales Department at SR_MI@trendforce.com.

For additional insights from TrendForce analysts on the latest tech industry news, trends, and forecasts, please visit https://www.trendforce.com/news/
