A3D-MoE: Acceleration of Large Language Models with Mixture of Experts via 3D Heterogeneous Integration

By Wei-Hsing Huang, Janak Sharda, Cheng-Jhih Shih, Yuyao Kong, Faaiq Waqar, Pin-Jun Chen, Yingyan (Celine) Lin and Shimeng Yu - Georgia Institute of Technology

Abstract

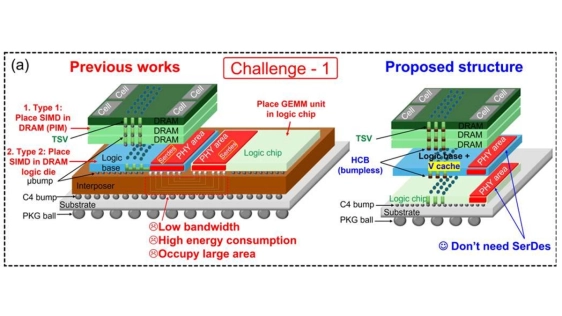

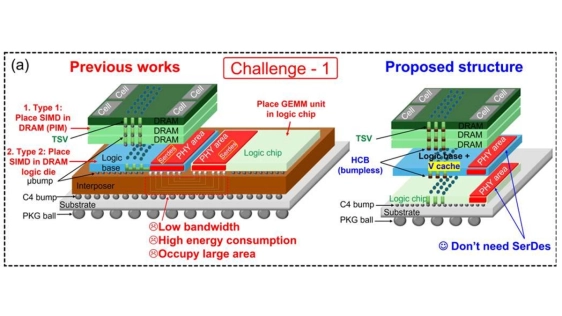

Conventional large language models (LLMs) are equipped with dozens of GB to TB of model parameters, making inference highly energy-intensive and costly because all the weights must be loaded to onboard processing elements during computation. Recently, the Mixture-of-Experts (MoE) architecture has emerged as an efficient alternative, promising efficient inference with fewer activated weights per token. Nevertheless, fine-grained MoE-based LLMs face several challenges: 1) variable workloads during runtime create arbitrary GEMV-GEMM ratios that reduce hardware utilization; 2) traditional MoE-based scheduling for LLM serving cannot fuse attention operations with MoE operations, leading to increased latency and decreased hardware utilization; and 3) despite being more efficient than conventional LLMs, loading experts from DRAM still consumes significant energy and requires substantial DRAM bandwidth. To address these challenges, we propose: 1) A3D-MoE, a 3D Heterogeneous Integration system that employs state-of-the-art vertical integration technology to significantly enhance memory bandwidth while reducing Network-on-Chip (NoC) overhead and energy consumption; 2) a 3D-Adaptive GEMV-GEMM-ratio systolic array with V-Cache efficient data reuse and a novel unified 3D dataflow, which addresses the reduced hardware utilization caused by arbitrary GEMV-GEMM ratios from different workloads; 3) a hardware resource-aware operation fusion scheduler that fuses attention operations with MoE operations to enhance hardware performance; and 4) MoE Score-Aware HBM access reduction with even-odd expert placement, which reduces DRAM access and bandwidth requirements. Our evaluation results indicate that A3D-MoE delivers significant performance enhancements, reducing latency by a factor of 1.8x to 2x and energy consumption by 2x to 4x, while improving throughput by 1.44x to 1.8x compared to the state-of-the-art.


Keywords— Fine-grained MoE structures acceleration, 3D Heterogeneous Integration, Software-Hardware Co-Design
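The first challenge in the abstract, arbitrary GEMV-GEMM ratios, can be illustrated with a small simulation. The sketch below is not the authors' method: it assumes a uniform random router in place of a learned one, and the expert count (64) and top-k value (6) are hypothetical numbers chosen only for illustration. An expert that receives exactly one token performs a matrix-vector product (GEMV), while an expert that receives several tokens performs a matrix-matrix product (GEMM), so the mix changes with batch size.

```python
import random
from collections import Counter


def route_tokens(num_tokens, num_experts, top_k, seed=0):
    """Return the number of tokens assigned to each expert.

    Assumption: each token picks top_k distinct experts uniformly at
    random. A real MoE router uses learned gating scores instead.
    """
    rng = random.Random(seed)
    load = Counter()
    for _ in range(num_tokens):
        for expert in rng.sample(range(num_experts), top_k):
            load[expert] += 1
    return [load[e] for e in range(num_experts)]


def classify_workload(load):
    """Count experts by the kind of kernel their token count implies."""
    return {
        "gemv": sum(1 for n in load if n == 1),  # one token: matrix-vector
        "gemm": sum(1 for n in load if n > 1),   # several tokens: matrix-matrix
        "idle": sum(1 for n in load if n == 0),  # no tokens routed here
    }


if __name__ == "__main__":
    # Hypothetical configuration: 64 experts, 6 active experts per token.
    for batch in (1, 4, 32):
        load = route_tokens(batch, num_experts=64, top_k=6)
        print(batch, classify_workload(load))
```

With a single token every active expert runs a GEMV; as the batch grows, more experts receive multiple tokens and shift to GEMM, which is the kind of runtime variation a fixed-shape compute array handles poorly.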
