Arteris’ Multi-Die Solution for the RISC-V Ecosystem

The amount of compute used to train frontier AI models has been doubling roughly every five to six months, but ‘Moore’s Law’, the observation that the number of transistors on a chip doubles roughly every two years, is slowing as shrinking transistors becomes increasingly difficult. Low yield on large dies, the need for greater flexibility, high development costs on the latest process nodes, limits on maximum die size, and the need for process technology specialization are driving the industry toward chiplet-based solutions. Furthermore, the lesser-known Dennard scaling, the observation that power density stays roughly constant as transistors shrink (so power per transistor falls with size), ceased to hold many years ago, making power consumption an additional obstacle to scaling chip size and frequency. As a result, processor designers turned to multi-core designs as a scalable route to higher performance, and more recently to multi-die systems. In shared-memory multi-core systems, hardware support for cache coherency is crucial to keep data consistent between caches and memory while maintaining high performance.

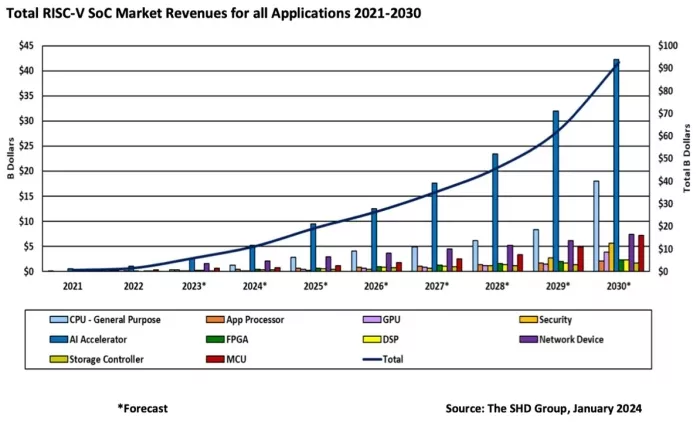

RISC-V processors have quickly progressed from open-source microcontrollers to advanced multi-core, cache-coherent clusters that compare favorably with the most advanced designs in the industry. They are increasingly deployed in HPC, server, and AI applications, which push today’s semiconductor technology to its limits. Chiplets allow performance to scale beyond that of a single die, can improve yield and reduce cost, and make it possible to choose the optimal process technology for each function. Today, chiplets ship in relatively low volumes, mostly from large integrated companies building fully in-house solutions, but market forecasts project explosive growth in chiplet use, at a CAGR (Compound Annual Growth Rate) of 42–75%, through 2030 and beyond.

Projected Growth of RISC-V SoC Market Revenues by Application (2021–2030)

Arteris customers using RISC-V processors have used die-to-die interconnects for some time, typically non-coherent interconnects (such as Arteris’ FlexNoC) that transfer data from chiplet to chiplet by packetizing a standard interface such as AMBA and sending it over a die-to-die interface such as UCIe or BoW (Bunch of Wires). In June 2025, however, Arteris introduced coherent die-to-die connectivity, which extends cache coherency across multiple chiplets. Coherency is far more complex than non-coherent transfer because keeping caches consistent requires protocol messages (requests, snoops, and responses) to travel back and forth between agents in the system; in a multi-die system, that means between agents on different chiplets. This contrasts with the much simpler A-to-B data transfer of a non-coherent interconnect.
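To make the contrast concrete, here is a deliberately simplified Python model. It assumes a toy directory-based scheme, not the actual AMBA CHI or ACE message flows and not Ncore’s implementation, and counts how many messages cross a die boundary for a non-coherent write versus a coherent read of a line held dirty on a third die. The names `CacheLine`, `noncoherent_write`, `coherent_read`, and `die_crossings` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# (source die, destination die, message kind). A toy model, NOT AMBA CHI/ACE.
Msg = Tuple[int, int, str]


@dataclass
class CacheLine:
    home_die: int             # die whose directory tracks this line
    owner_die: Optional[int]  # die holding a dirty copy, or None if clean


def noncoherent_write(src: int, dst: int) -> List[Msg]:
    """Non-coherent A-to-B transfer: packetized write data plus an acknowledgement."""
    return [(src, dst, "WriteData"), (dst, src, "WriteAck")]


def coherent_read(req: int, line: CacheLine) -> List[Msg]:
    """Coherent read via the home directory, snooping a remote dirty owner if needed."""
    msgs: List[Msg] = [(req, line.home_die, "ReadReq")]
    if line.owner_die is not None and line.owner_die != req:
        # Dirty copy elsewhere: home snoops the owner, which forwards the data.
        msgs.append((line.home_die, line.owner_die, "Snoop"))
        msgs.append((line.owner_die, req, "Data"))
        msgs.append((req, line.home_die, "Ack"))  # tells home the transaction completed
    else:
        # No remote dirty copy: the home die supplies the data from memory.
        msgs.append((line.home_die, req, "Data"))
    return msgs


def die_crossings(msgs: List[Msg]) -> int:
    """Count messages whose source and destination are on different dies."""
    return sum(1 for src, dst, _ in msgs if src != dst)


if __name__ == "__main__":
    print(die_crossings(noncoherent_write(0, 1)))                                # 2
    print(die_crossings(coherent_read(0, CacheLine(home_die=1, owner_die=2))))  # 4
```

In this toy model the non-coherent write crosses the die-to-die link twice while the coherent read crosses it four times; real protocols add further message types and optimizations, so the counts are illustrative only.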

Examples of Multi-die Configurations

Arteris’ Ncore cache-coherent interconnect IP works with RISC-V CPUs that use either the AMBA CHI or the AMBA ACE coherent protocol, two of the most widely used coherent interfaces in the industry and increasingly adopted by RISC-V vendors. The multi-die option for Ncore extends coherency across multiple chiplets, supporting 2 to 4 chiplets in either fully connected or mesh topologies, with 1 to 4 UCIe links per die. Chiplets may be homogeneous (identical) or heterogeneous (different). Homogeneous chiplets scale up performance by replicating the same die multiple times, whereas heterogeneous chiplets differ in function and/or process, for example using different semiconductor processes for SRAM, high-voltage, or RF circuits. Ncore with the multi-die option can support either arrangement, or both at once, with full coherency, provided an Ncore instance is present on each die. The Ncore configuration need not be identical on each chiplet. Non-coherent links may also be used for high-bandwidth data transfer in applications such as AI accelerators.
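The configuration limits above can be checked with a small sketch. It assumes that a mesh places dies on a rows × cols grid linked to their horizontal and vertical neighbors, and that several UCIe links may be bonded between the same pair of dies (the hypothetical `links_per_pair` parameter); both are assumptions for illustration, not documented Ncore behavior. All function names are hypothetical.

```python
from itertools import combinations
from typing import List, Tuple

MIN_DIES, MAX_DIES = 2, 4    # chiplet count supported by the multi-die option (per the text)
MIN_LINKS, MAX_LINKS = 1, 4  # UCIe links per die (per the text)

Edge = Tuple[int, int]


def fully_connected(n: int) -> List[Edge]:
    """Every die is linked directly to every other die."""
    return list(combinations(range(n), 2))


def mesh(rows: int, cols: int) -> List[Edge]:
    """Assumed mesh layout: dies on a grid, linked to horizontal/vertical neighbors."""
    edges: List[Edge] = []
    for r in range(rows):
        for c in range(cols):
            die = r * cols + c
            if c + 1 < cols:
                edges.append((die, die + 1))
            if r + 1 < rows:
                edges.append((die, die + cols))
    return edges


def links_per_die(n: int, edges: List[Edge], links_per_pair: int = 1) -> List[int]:
    """UCIe links each die must provide, given links_per_pair links on every edge."""
    counts = [0] * n
    for a, b in edges:
        counts[a] += links_per_pair
        counts[b] += links_per_pair
    return counts


def fits_limits(n: int, edges: List[Edge], links_per_pair: int = 1) -> bool:
    """True if the die count and every die's link count are within the stated ranges."""
    if not MIN_DIES <= n <= MAX_DIES:
        return False
    return all(MIN_LINKS <= k <= MAX_LINKS
               for k in links_per_die(n, edges, links_per_pair))
```

With one link per pair, a fully connected 4-die system needs 3 links per die, which fits the limit, while a 2×2 mesh needs only 2, leaving room (under the bonding assumption) to double up links between neighbors for bandwidth.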

“Chiplets demand advanced NoC IP to scale performance across multi-core and multi-die systems,” said Richard Wawrzyniak, The SHD Group. “Arteris solutions like Ncore and FlexNoC are key to unlocking bandwidth, coherency, and flexibility in next-gen AI and HPC designs.”

Ncore with the multi-die option uses ‘UCIe 1.1 Advanced’, the most widely adopted die-to-die interface in the industry. UCIe Advanced employs a silicon interposer (similar in role to a small PCB, but fabricated in silicon using chip-making technology), an arrangement typically referred to as 2.5D multi-die. It provides 64GB/s of bandwidth per link, so with up to 4 links Ncore can provide up to 256GB/s of raw bandwidth, although packetization and protocol overheads reduce the usable bandwidth. ‘UCIe 1.1 Standard’, which uses an organic substrate instead of a silicon interposer (referred to as 2D multi-die), is also supported, but at ¼ the bandwidth of UCIe Advanced.
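The bandwidth arithmetic can be written out as below. The 64GB/s per link and the ¼ ratio for UCIe Standard come from the text; the `efficiency` factor standing in for packetization and protocol overheads is a hypothetical parameter, since the real overhead depends on traffic mix and configuration.

```python
ADVANCED_GB_PER_S_PER_LINK = 64.0  # GB/s per UCIe 1.1 Advanced link, as quoted in the text
STANDARD_RATIO = 0.25              # UCIe 1.1 Standard at 1/4 of Advanced, per the text


def raw_bandwidth(links: int, advanced: bool = True) -> float:
    """Aggregate raw die-to-die bandwidth in GB/s for 1 to 4 links."""
    if not 1 <= links <= 4:
        raise ValueError("the multi-die option supports 1 to 4 UCIe links per die")
    per_link = ADVANCED_GB_PER_S_PER_LINK * (1.0 if advanced else STANDARD_RATIO)
    return links * per_link


def usable_bandwidth(links: int, efficiency: float, advanced: bool = True) -> float:
    """Raw bandwidth scaled by a hypothetical efficiency factor for packet/protocol overhead."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    return raw_bandwidth(links, advanced) * efficiency
```

For example, `raw_bandwidth(4)` gives 256.0 GB/s, `raw_bandwidth(4, advanced=False)` gives 64.0 GB/s, and an assumed 75% efficiency yields `usable_bandwidth(4, 0.75)` of 192.0 GB/s.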

As multi-die systems grow more complex, managing the connectivity and CSRs (Control and Status Registers) of multiple chiplets within an assembly becomes increasingly challenging. Arteris Magillem Connectivity and Magillem Registers ease the management of partitioning and memory maps across entire chiplet assemblies. Together with Arteris’ FlexNoC and FlexGen for non-coherent die-to-die interconnect and Ncore for coherent die-to-die interconnect, they form a complete multi-die solution that enables flexible design scaling and faster time to silicon, with broad standards and ecosystem support.
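One part of that job, combining per-chiplet memory maps into a single global map and catching address collisions, can be sketched as follows. This is a generic illustration assuming each chiplet’s local map is relocated into a fixed global window; it is not the Magillem API, and the names `Region`, `assemble`, and `find_overlaps` are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Region:
    name: str
    base: int  # start address in bytes
    size: int  # length in bytes

    @property
    def end(self) -> int:
        return self.base + self.size  # exclusive end address


def assemble(chiplets: Dict[str, List[Region]], windows: Dict[str, int]) -> List[Region]:
    """Relocate each chiplet's local regions by its global window base; return sorted by address."""
    flat = [Region(f"{chip}.{r.name}", windows[chip] + r.base, r.size)
            for chip, regions in chiplets.items() for r in regions]
    return sorted(flat, key=lambda r: r.base)


def find_overlaps(regions: List[Region]) -> List[Tuple[str, str]]:
    """For a base-sorted map, flag each region that starts before an earlier region ends."""
    clashes: List[Tuple[str, str]] = []
    furthest = None  # earlier region reaching the highest end address so far
    for r in regions:
        if furthest is not None and r.base < furthest.end:
            clashes.append((furthest.name, r.name))
        if furthest is None or r.end > furthest.end:
            furthest = r
    return clashes


if __name__ == "__main__":
    local = [Region("csr", 0x0, 0x1000), Region("sram", 0x10000, 0x10000)]
    chips = {"die0": local, "die1": local}
    print(find_overlaps(assemble(chips, {"die0": 0x0, "die1": 0x100000})))  # []
    print(find_overlaps(assemble(chips, {"die0": 0x0, "die1": 0x10000})))   # [('die0.sram', 'die1.csr')]
```

Tracking the furthest-reaching earlier region, rather than comparing only neighbors in the sorted list, ensures a large region that overlaps a later, non-adjacent one is still flagged.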

Chiplets are here to stay, and Arteris has the technology to help companies innovate faster without risk. Learn more about Arteris products and solutions at arteris.com.
