Creating the Connectivity Required for AI Everywhere

By Tony Chan Carusone, CTO, Alphawave Semi

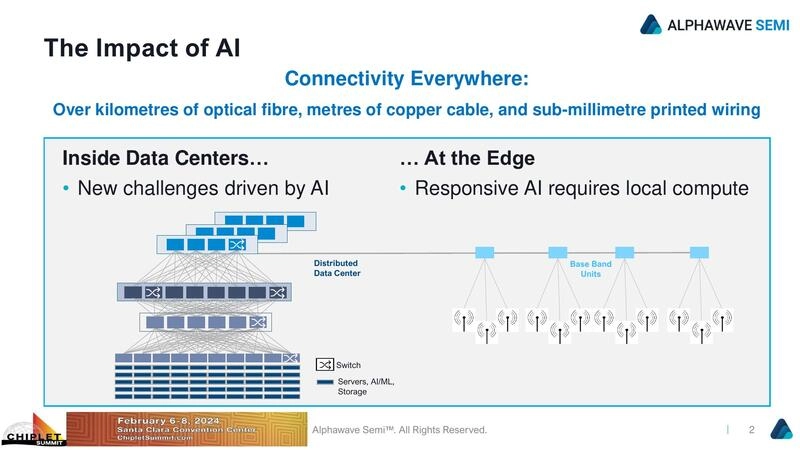

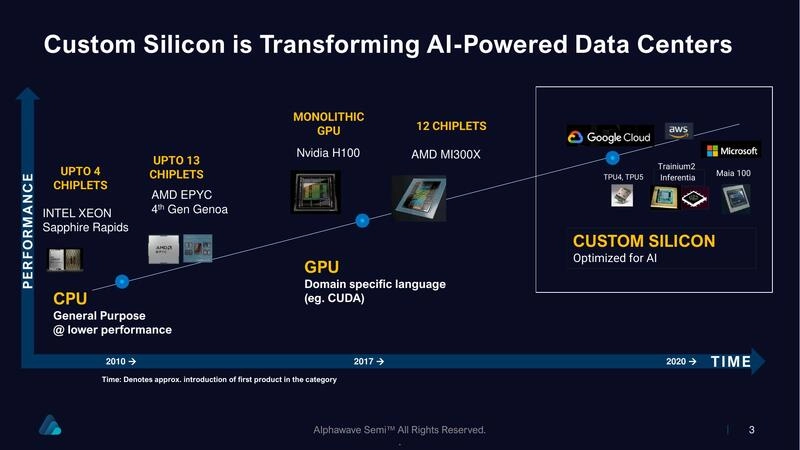

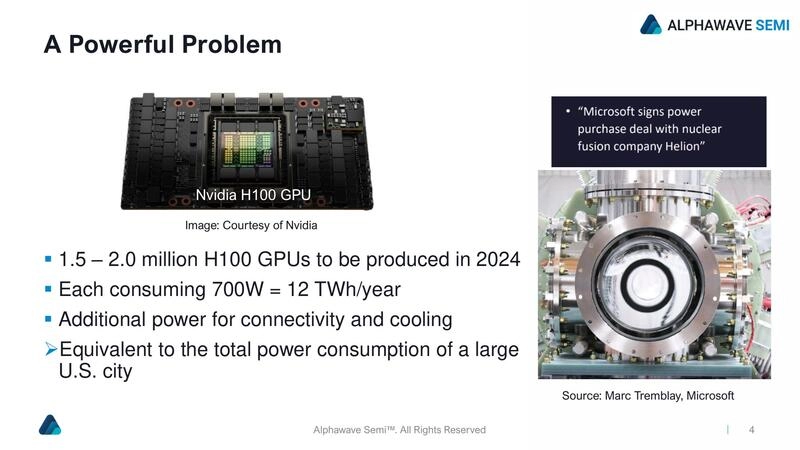

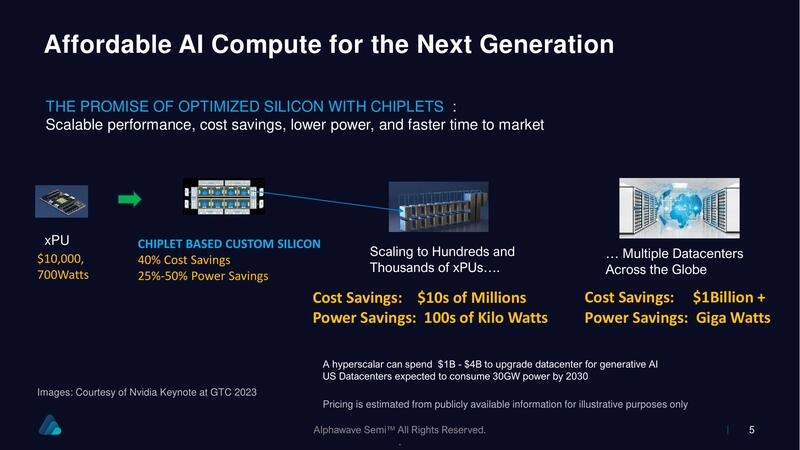

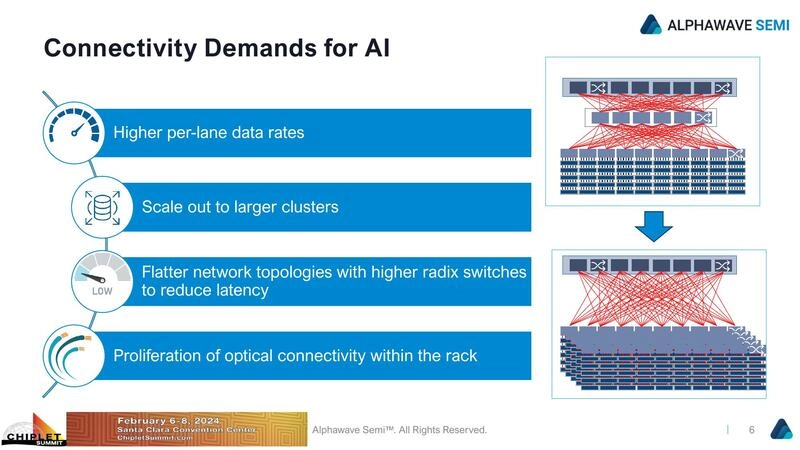

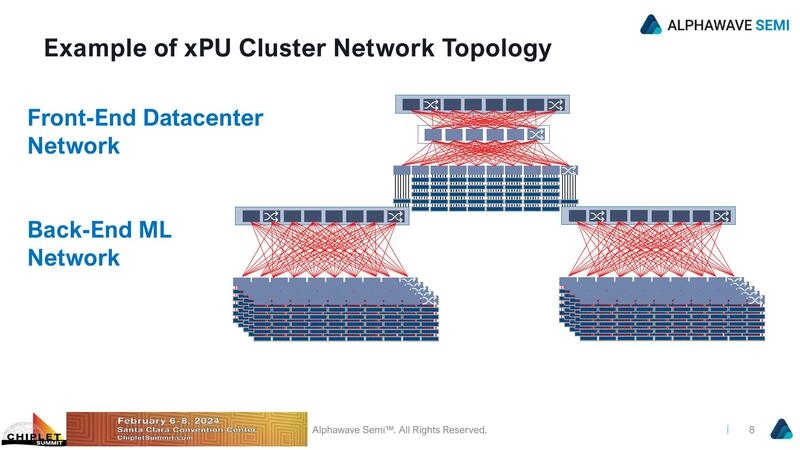

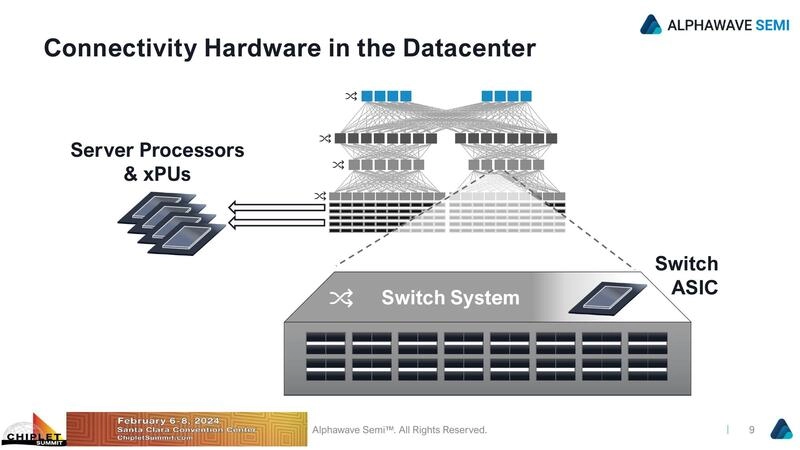

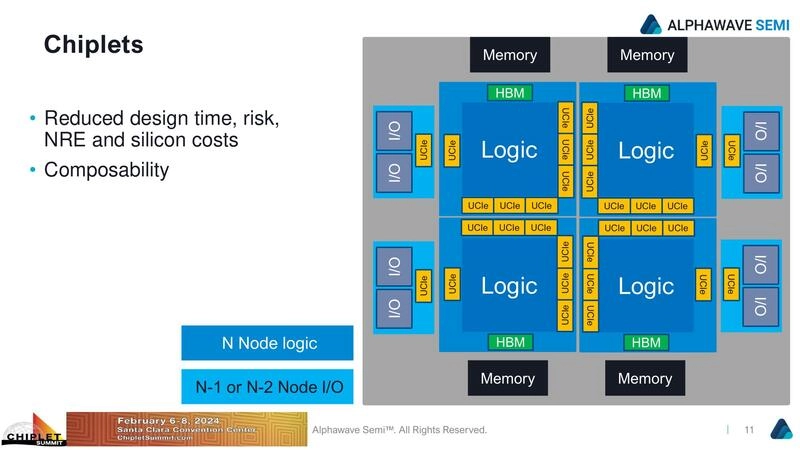

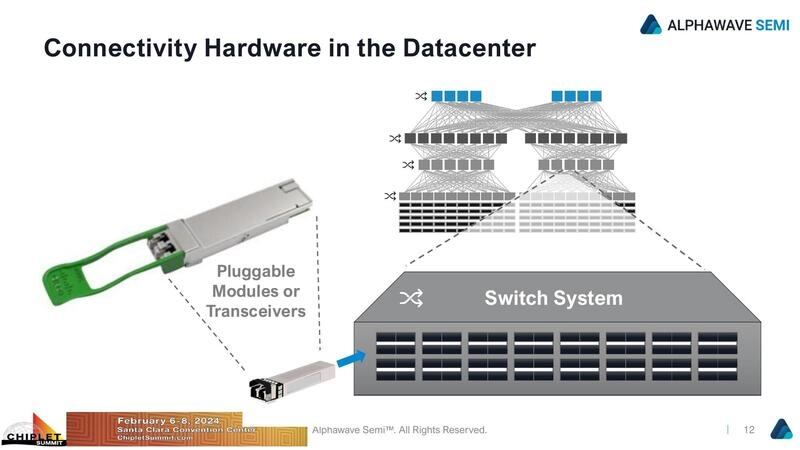

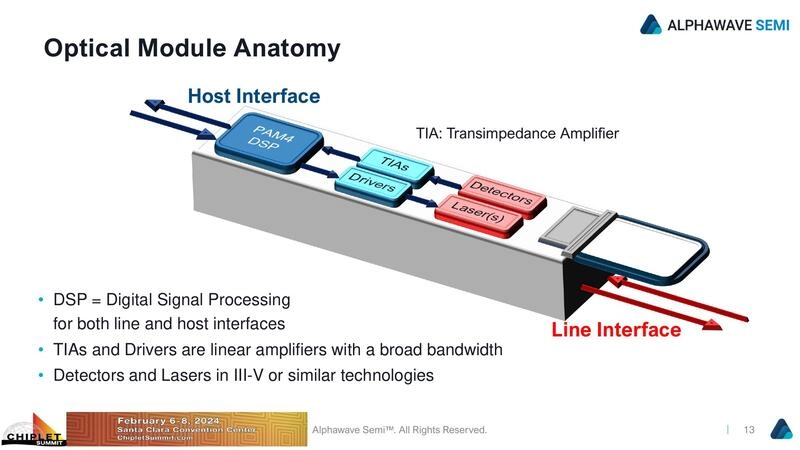

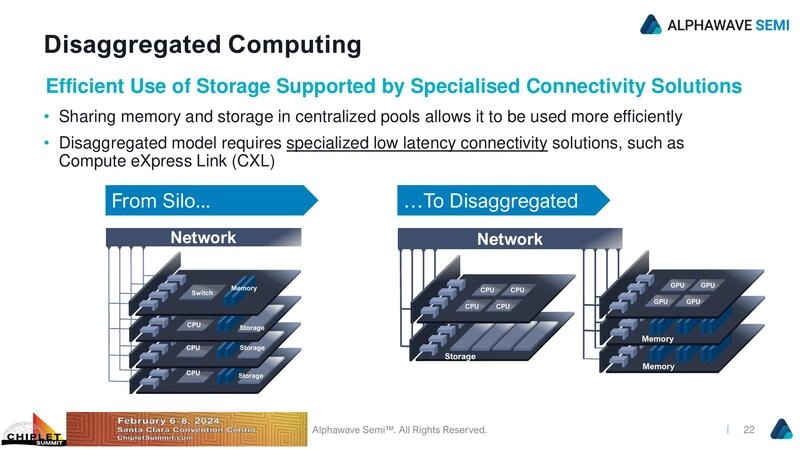

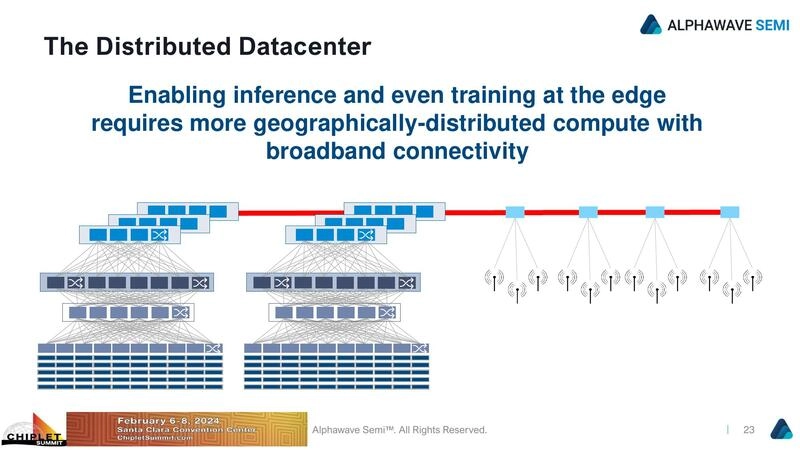

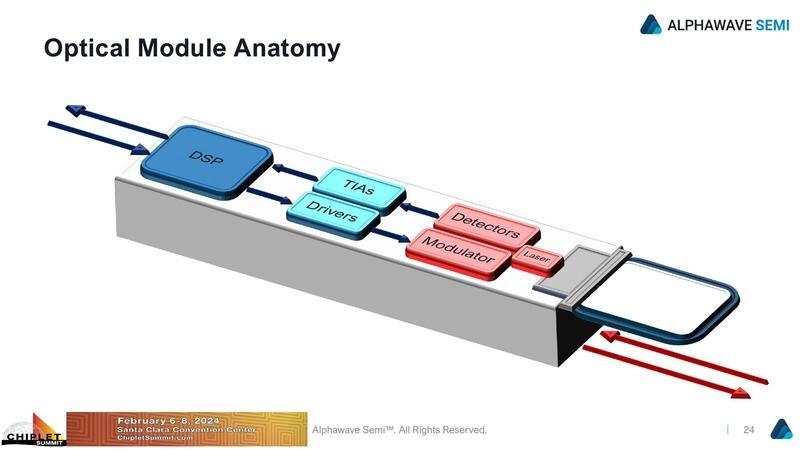

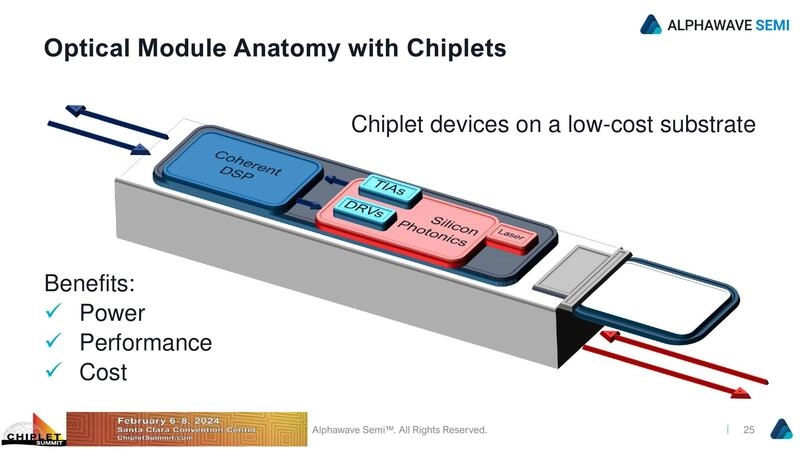

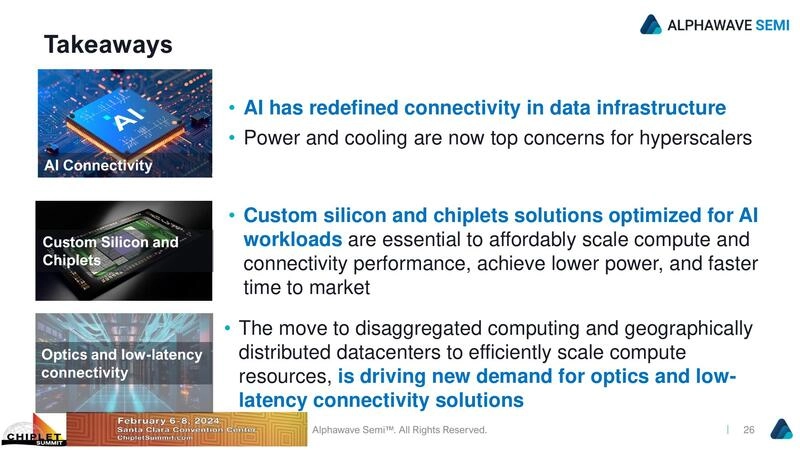

All major semiconductor companies now use chiplets to develop devices at leading-edge nodes. This approach requires a die-to-die interface within the package that provides very fast communication. Such an interface is particularly important for AI applications, which are springing up everywhere, from large systems to the edge. AI requires high throughput, low latency, low energy consumption, and the ability to manage large data sets. The interface must serve needs ranging from enormous clusters that require optical interconnects to portable, wearable, mobile, and remote systems that are extremely power-limited. It must also support platforms such as the widely recognized ChatGPT and others that are on the horizon. The right interface with the right ecosystem is critical for the new world of AI everywhere.